We are about to venture into a heated and abnormal world. Hardware encoders, designed for real-time encoding, may be reaching the point where they can also be considered for video archival. The three common consumer options that we will look at are:

- AMD’s VCN encoder (using 6900XT / RDNA2 architecture)

- Nvidia’s NVENC encoder (using 3060 RTX / 7th generation)

- Intel QSV encoder (using i7-11800H / Version 8)

These tests will all use HEVC UHD HDR10 source material and have valid UHD HDR10 10-bit output as well. This is not a common use case. These tests have been done because these encoders are being added to FastFlix. FastFlix is a free and open source GUI for common video encoding software, specifically designed to help with HDR10 videos.

What’s so odd about using a hardware encoder for encoding videos?

When compressing an existing video file, one of the main goals is to lower the bitrate. That saves disk space, and bandwidth if the file will be transferred a lot. For example, a single megabyte difference for a popular file on a large site could start costing hundreds of dollars in bandwidth fees.

That means you want to get as much quality as you can into the smallest package possible. Historically, however, hardware encoders were designed with the singular purpose of encoding at above real-time speeds. That way they could be used for video conferencing like Zoom, or to transcode videos on the fly as you watch them. Good quality was always wanted, of course, but speed was always more important.

However, as their hardware and software have matured, they are now reaching a point where they can reasonably be considered instead of crazy slow software encoders. I believe a large part of this is due to the introduction of the B-frame.

The almighty B-frame

In HEVC videos there are three types of frames: Intra-coded (I) frames, Predicted (P) frames, and Bidirectionally Predicted (B) frames. I-frames are pretty easy to understand: an I-frame is a full picture. It’s what everyone thinks about when they assume a video file is a bunch of pictures in a row, like it was back with real film.

However, with modern video codecs like HEVC, there are in-between frames that don’t have the full picture and are instead half filled with a bunch of math (motion vectors) that says “hey, move that area over this way for this frame.” P-frames do just that: they use the data in the frame before them and store the difference as motion vectors.

P-frames

In ideal scenarios, P-frames are about half the size of I-frames. That means if you have one I-frame followed by two P-frames, repeated for the entire movie, you’ve just cut off a third of the bitrate!

B-frames

B-frames are even more efficient: in an ideal world they can be half the size of a P-frame, aka a quarter of an I-frame. Imagine having a single I-frame, then two B-frames, a P-frame, then two more B-frames. You would have knocked off almost 60% of the bitrate! However, the problem is that B-frames are crazy hard to calculate. B is for Bidirectional, which means they look not only at the frame that was encoded before them, but also at the frame that will come after them. That’s right, you have to first encode the I-frame, and the P-frame (or another I-frame) that comes after it, before calculating the B-frames between them.
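The savings quoted for P-frames and B-frames can be checked with a few lines of arithmetic. This is only a sketch of the ideal case described above: the relative sizes (P is half of I, B is a quarter of I) come from the text, while the function name and the idea of comparing against an all-I-frame video are illustrative assumptions, not how any real encoder budgets bits.

```python
# Relative frame sizes in the ideal case from the text (I-frame = 1.0).
# Real encoders vary these sizes per frame; this is a toy model.
RELATIVE_SIZE = {"I": 1.0, "P": 0.5, "B": 0.25}


def bitrate_saving(pattern):
    """Fraction of bitrate saved versus storing every frame as an I-frame."""
    encoded = sum(RELATIVE_SIZE[frame] for frame in pattern)
    all_intra = len(pattern) * RELATIVE_SIZE["I"]
    return 1.0 - encoded / all_intra


for pattern in ("IPP", "IBBPBB"):
    print(f"{pattern}: {bitrate_saving(pattern):.1%} saved")
```

Under these assumptions the I P P pattern saves a third of the bitrate and I B B P B B saves a little over 58%, which is where the "almost 60%" figure comes from.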

Until recently, hardware encoders only thought about moving in a forward direction. They never thought to stop and wait to build frames that came behind the current one; who would want that? Well, without the B-frame you have to compensate by having either more I-frames or larger P-frames. Take the following scenario.

If the B-Frame was replaced with a P-frame (so it was I P P I) the P-frame with the sun in it would require additional image data stored in that frame. Whereas by using a B-frame, it can be stored as a motion vector from the following frame, thus saving large amounts of bitrate.
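Because a B-frame borrows from the frame that follows it, the encoder has to process frames out of display order. The sketch below illustrates that reordering for a toy sequence. The function name and the string-based frame pattern are made up for illustration, and real encoders use far more elaborate reference structures than "wait for the next I- or P-frame."

```python
def encode_order(display_order):
    """Reorder frames so every B-frame comes after the anchor that follows it.

    display_order is a string such as "IBBPBBP"; the result is a list of
    (display_index, frame_type) tuples in the order they would be encoded.
    """
    ordered = []
    pending_b = []  # B-frames waiting for the next anchor frame (I or P)
    for index, frame_type in enumerate(display_order):
        if frame_type == "B":
            pending_b.append((index, frame_type))
        else:
            # Encode the anchor first, then the B-frames displayed before it.
            ordered.append((index, frame_type))
            ordered.extend(pending_b)
            pending_b = []
    # Trailing B-frames have no later anchor in this toy model; emit them last.
    ordered.extend(pending_b)
    return ordered


order = encode_order("IBBPBBP")
print(" ".join(f"{frame_type}{index}" for index, frame_type in order))
# prints: I0 P3 B1 B2 P6 B4 B5
```

Frame 3 has to be finished before frames 1 and 2 can even start, which is exactly the kind of waiting a purely forward-moving real-time encoder was never designed to do.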

Thankfully Nvidia and Intel have both decided that it’s time to bring some quality to the hardware world, and do have B-frames in their latest hardware encoders. Sadly, AMD still doesn’t have any support for it with HEVC videos.

Hardware Encoding Head to Head

This is probably why you’re here: to see how they stack up against each other. We are going to compare three videos across four different encoders. All encodes produced valid HDR10 videos and used the same settings per encoder (except for one hiccup, with Intel QSV erroring when using --extbrc on one video).

Methodology

Tests like this are only as good as their documentation of how they were acquired. To that end, I wrote a script to run all these tests so that there were no quarrels about how they were tested (downloadable here). All tests were run on Windows 10 Version 10.0.19042 Build 19042 with the following settings.

The NVENC and QSV encodings were done on a laptop while plugged in on maximum power mode. The AMD VCN and x265 encodes were done on a desktop PC. Because of obvious hardware differences, encoding speed and power will not be considered as part of these tests.

| | AMD VCE / VCN | Nvidia NVENC | Intel QSV | x265 (Software) |
| --- | --- | --- | --- | --- |
| Options (bold are non-default) | --ref 3 --preset slow --pe --tier High | --bframes 3 --ref 3 --preset quality --tier high --lookahead 16 --aq --aq-strength 0 --multipass 2pass-full --mv-precision Q-pel | --quality best --la-depth 16 --la-quality slow --extbrc (--extbrc not set on Glass Blowing) | -preset slow aq-mode=2 strong-intra-smoothing=1 bframes=4 b-adapt=2 frame-threads=0 hdr10=1 hdr10_opt=1 chromaloc=2 |
| Hardware | 6900 XT | 3060 RTX | i7-11800H | i9-9900K |
| Driver | 21.7.2 | 471.68 Game Ready | 27.20.100.9749 | 10.0.19041.546 (intelppl.sys) |
| Software | VCEEnc (x64) 6.13 AMF Version 1.4.21 | NVEncC (x64) 5.37 NVENC API v11.1 CUDA 10.1 | QSVEncC (x64) 5.06 Hardware API v1.35 | FFmpeg ~4.4 x265 @ commit 82786fc |

The x265 software encoder was also given the benefit of running in two-pass mode. The slow preset was used as it was determined to be an ideal choice in previous tests.

The hardware encoders were set to use the “best” settings for measured quality, not perceived quality. I did not have as much time to test NVENC and QSV as I did VCN, so there may be more to eke out of those two encoders.

Downloadables

I wrote a Python script to do all this testing, which can be downloaded: compare.py

If you want to check out the VMAF / PSNR / SSIM results yourself, here are the JSON files.

Wonderland Two – 4K HDR10 – 24fps – 51.4 Mb/s bitrate

The first encoder comparison is with Samsung’s Wonderland Two 4K HDR10 video, a time-lapse where large chunks of the video have small changes while other parts have rapidly moving blurs. This one’s original bitrate is around 51,000k. We will be cutting it down to less than 1/20th of its original size at 2500k, so there will be obvious quality loss. Let’s see how the encoders handle it!

Both NVENC and QSV put up a great showing, riding the VMAF 93 mark at 7500K, a mere 15% of the original file size. VCN doesn’t even reach that point at 12500K, so would presumably need around twice as much bitrate to achieve the same quality!

Let’s take a deep dive into what these scores really translate to over the course of the movie. These charts show the VMAF score at every 10th frame for each of the encoders with their 10000k bitrate video.
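For anyone wanting to build a similar per-frame chart from their own VMAF logs, here is a minimal sketch of the sampling step. It assumes a libvmaf-style JSON layout with a top-level "frames" list where each entry holds the score under "metrics" then "vmaf"; the layout of the JSON files linked above is not shown here, so adjust the keys if they differ. The scores in the sample log are synthetic placeholders, not results from these tests.

```python
import json


def sampled_vmaf(log_text, step=10):
    """Return the VMAF score of every `step`-th frame from a JSON log.

    Assumes the layout {"frames": [{"metrics": {"vmaf": <score>}}, ...]}.
    """
    log = json.loads(log_text)
    scores = [frame["metrics"]["vmaf"] for frame in log["frames"]]
    return scores[::step]


# Synthetic placeholder log: 30 frames with scores falling from 100 to 71.
log_text = json.dumps(
    {"frames": [{"frameNum": n, "metrics": {"vmaf": 100.0 - n}} for n in range(30)]}
)

points = sampled_vmaf(log_text)
print(points)  # [100.0, 90.0, 80.0]
print("lowest sampled score:", min(points))
```

Sampling every 10th frame keeps the chart readable, but note that a very short drop could fall between samples, so the minimum over all frames is worth checking separately.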

In this case the video is compressed to a fifth of its original file size! It went on a diet from 821MB to 160MB, so expect to see some big encoding degradation.
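The 821MB to 160MB figure follows directly from the bitrates. As a rough sanity check, assuming 1 MB is 10^6 bytes and 1 Mb is 10^6 bits, and ignoring audio tracks and container overhead (both simplifications of mine), the source size and its 51.4 Mb/s bitrate give the clip length, and that length at 10 Mb/s gives the new size. The helper names are made up for this example.

```python
def duration_seconds(size_mb, bitrate_mbps):
    """Clip length implied by a file size (MB) and average bitrate (Mb/s)."""
    return size_mb * 8 / bitrate_mbps


def size_mb(duration_s, bitrate_mbps):
    """File size (MB) for a clip of the given length at an average bitrate."""
    return duration_s * bitrate_mbps / 8


source_duration = duration_seconds(821, 51.4)  # source file from the text
target_size = size_mb(source_duration, 10.0)   # the 10000k encode

print(f"duration: about {source_duration:.0f} s")
print(f"size at 10 Mb/s: about {target_size:.0f} MB")
```

This lands on roughly 128 seconds and 160 MB, matching the numbers above.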

It’s really obvious that AMD’s VCN encoder struggles with scene changes (the sudden sharp drops). I imagine this is due to a lack of pre-analysis, as talked about in the last post. It also seems that QSV has some trouble with them, but at least NVENC has its head held high in that regard! I also suspect VCN is trailing behind due to its lack of B-frame support.

Dolby’s Glass Blowing Demo – 4K HDR10 – 60fps – 15.1 Mb/s bitrate

Second, Dolby’s Glass Blowing Demo is a three-minute-long 4K HDR10 video that is constant motion with lots of changing details: fire, smoke and rain. It is a high fps source video, but has a much lower bitrate to start from. That means the scores should be higher, as it’s easier to reach the same quality.

AMD’s VCN and Nvidia’s NVENC trade blows the whole way with this video, with x265 taking a clear lead throughout the curve.

Intel QSV starts off rocking both NVENC and VCN, then …something… happens. I honestly thought it was an error with my testing at first, possibly a misaligned video track while calculating VMAF. However, after a quick look at the spread chart, we can see it’s more insidious than that.

There are two huge drops with QSV. I checked the video file, and found that there were two sections that became suddenly blocky and laggy, as if it was skipping or duplicating frames wrongly. I have no idea what caused it, and worse there was no indication of error! I can only speculate the encoder was designed to keep working even if there was some disruption in either compute capability or access to the video file. That is good for real-time encoding like streaming, but unacceptable for video archival.

This frankly leaves QSV out of the running for any consideration of use for backing up videos. If anyone knows of fixes or prevention of this, please leave a comment!

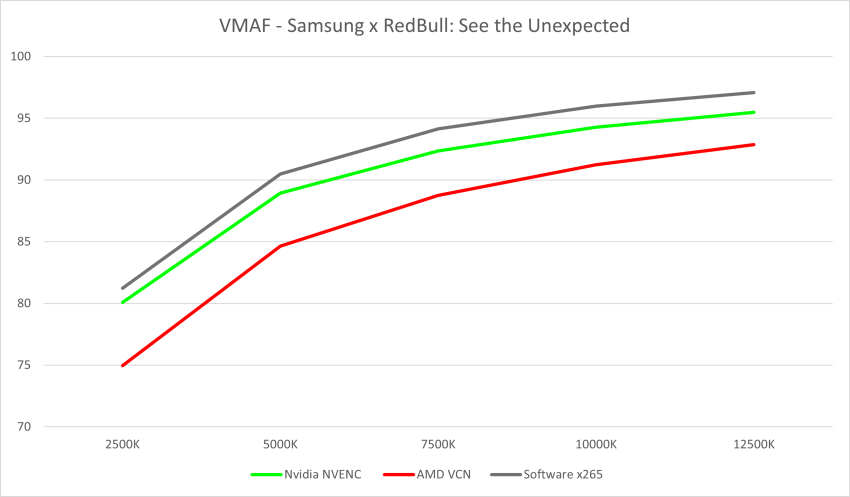

Samsung x RedBull: See the Unexpected – 4K HDR10 – 60fps – 51.8 Mb/s bitrate

Finally I tested with a high fps and high bitrate video, Samsung x RedBull: See the Unexpected. I excluded QSV from testing entirely given how badly it failed in the last run.

Nvidia’s NVENC is hot on x265’s tail, with VCN lagging behind.

AMD’s VCN still has clear drops. NVENC struggles more with this video than any of the others before, but still clearly pulls ahead of VCN. x265 is sitting tall, having a clean and very impressive line for a video that is a fifth of the original size with a minimum VMAF of 84.25 vs NVENC’s low of 56.13 then VCN taking rear guard at 48.16 (all had a max of 100).

Conclusions

Has HEVC hardware encoding caught up to the quality of software encoding?

No.

It seems that the three titans of the GPU industry still haven’t figured out how to build encoding hardware pipelines that are both fast and high quality.

Would I use hardware encoders for my own videos?

Yes.

The two Samsung tests were done on the extreme end of compression, down to 1/20th of the original size. The Glass Blowing Demo VMAF deep dive gives a better idea of what to expect from a more typical re-encode, going from 15Mb/s to 10Mb/s.

As we have said before, don’t needlessly re-encode videos. In my particular case I am happy using any of these hardware encoders for quick encodes rather than sitting around all day for a slight, and probably unnoticeable, quality difference with x265.

Is there hope for a true consumer hardware encoding competitor to x265 quality?

No.

Why would there be? Everything available is “good enough” and there is no incentive for these companies to put the phenomenal effort required into this specific task.

I would absolutely love to be proven wrong on this, but I personally don’t see any investment going into improving HEVC encoding when AV1 is right around the corner.

Disclaimer

These tests were done on my own hardware, which I purchased myself. No company has asked me to write this, modify or reword anything, or omit anything. All conclusions are my own thoughts and opinions and in no way represent any company.

Is there any way to see some RF setting examples for this? I suppose you omitted them for the purpose of a bitrate-targeted test with 2-pass to compensate. It would be cool to see a chart showing the quality for some common RF values you’d use for 4K video, to quickly see if, say, RF18 CPU x265 is the size of RF20 GPU x265 if you’re targeting based on quality preservation (which is more common than bitrate targeting). I think that’s how you did it in a guide I came across from you way back.

GPU encoding does not support RF AFAIK (pretty damn sure). The best you can do is to run an x265 RF transcode of a sample, check the resulting bitrate and use this as a reference with HW encoding. I did this with my VMAF tests.

CRF for GPU encoding is definitely supported in Handbrake, I just haven’t used the FastFlix encoder and tried with all the custom presets that optimize the B frames and such. FastFlix uses FFmpeg so it should have support for it.

Yes, you are right, my memory misled me. I did my own VMAF tests (Handbrake, XMedia Recode, FastFlix, Pascal vs Turing vs x265) with target bitrates to get directly comparable results. You could do some yourself too, it was not difficult.

The test and feature I was waiting for! I am just confused by the fact that HDR settings are only available in FastFlix with x265 and not with NVENC. Am I missing something?

HDR10 is not supported through ffmpeg’s nvenc, need to download / link NVEncC https://github.com/cdgriffith/FastFlix/wiki#hardware-encoders

Thanx! I’ve got it now.

I did some 4K HDR transcodes with rigaya’s NVEnc & FastFlix. I am impressed! This is the best free (and pro!) transcoder I have met until now.

My favs were XMedia Recode (lacks HDR) and HandBrake (8 bit internal pipeline limitation and no HDR); for HDR 10 bit, Hybrid (GUI too complicated). I even bought DVDFab Transcoder (nice GUI, superb HW support but no focus on quality at all). We never became friends with StaxRip. Amazing job you did!

the small messages in the “source” field at startup are great 😀

How about labeling your axes for crying out loud. Is higher or lower better, for BOTH AXES?

Are these VMAF drops still there in newer implementations like Arc?